Online Assessments

A crucial component of teaching critical thinking is designing assessments in which students can explore and examine potential solutions. To that end, I developed sophisticated cloud-based external graders for three major database systems: MySQL (a relational SQL database), Neo4J (a graph database), and MongoDB (a document-oriented database that stores documents in collections).

Cloud-Based External Auto-Grading Architecture

I developed three external graders that automatically start a new Docker container (an isolated, lightweight environment rather than a full virtual machine) running the relevant database (MySQL, MongoDB, or Neo4J) and load it with randomly generated data based on constraints defined by the question designer. With this grading infrastructure, I offered online database assessments in which students develop and examine potential solutions. Because students receive a new data instance for every query attempt, they must develop a solution that works regardless of the data stored in the database.
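The data-generation step can be illustrated with a minimal Python sketch. It is an assumption-laden stand-in, not the graders' actual code: Python's built-in `sqlite3` in-memory database plays the role of the freshly started container, and the constraint format (`CONSTRAINTS`, with its `serial`, `int`, `text`, and `choice` column kinds) and the helpers `random_value` and `generate_instance` are hypothetical names invented for illustration.

```python
import random
import sqlite3
import string

# Hypothetical constraint spec written by a question designer. The format is an
# illustration only; the real graders' constraint language is not described here.
CONSTRAINTS = {
    "table": "employee",
    "rows": (5, 15),  # inclusive bounds on the number of generated rows
    "columns": {
        "id": {"type": "serial"},
        "name": {"type": "text", "length": 6},
        "dept": {"type": "choice", "values": ["HR", "IT", "Sales"]},
        "salary": {"type": "int", "min": 30000, "max": 120000},
    },
}

# SQL column type used for each generator kind.
SQL_TYPES = {"serial": "INTEGER PRIMARY KEY", "int": "INTEGER",
             "text": "TEXT", "choice": "TEXT"}


def random_value(spec, row_index, rng):
    """Draw one value that satisfies a single column's constraint."""
    kind = spec["type"]
    if kind == "serial":
        return row_index + 1
    if kind == "int":
        return rng.randint(spec["min"], spec["max"])
    if kind == "choice":
        return rng.choice(spec["values"])
    if kind == "text":
        return "".join(rng.choices(string.ascii_lowercase, k=spec["length"]))
    raise ValueError(f"unknown column type: {kind}")


def generate_instance(constraints, seed=None):
    """Create a fresh database and fill it with random rows that obey the constraints."""
    rng = random.Random(seed)  # seed=None draws a new instance on every call
    conn = sqlite3.connect(":memory:")  # stand-in for a newly started container
    table, columns = constraints["table"], constraints["columns"]
    ddl = ", ".join(f"{name} {SQL_TYPES[spec['type']]}" for name, spec in columns.items())
    conn.execute(f"CREATE TABLE {table} ({ddl})")
    n_rows = rng.randint(*constraints["rows"])
    rows = [tuple(random_value(spec, i, rng) for spec in columns.values())
            for i in range(n_rows)]
    placeholders = ", ".join("?" for _ in columns)
    conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
    conn.commit()
    return conn
```

Calling `generate_instance(CONSTRAINTS)` without a seed yields a differently sized and differently populated `employee` table each time, while every row still respects the designer's ranges and allowed values. Passing a fixed `seed` makes an instance reproducible, which can be handy when debugging a question.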

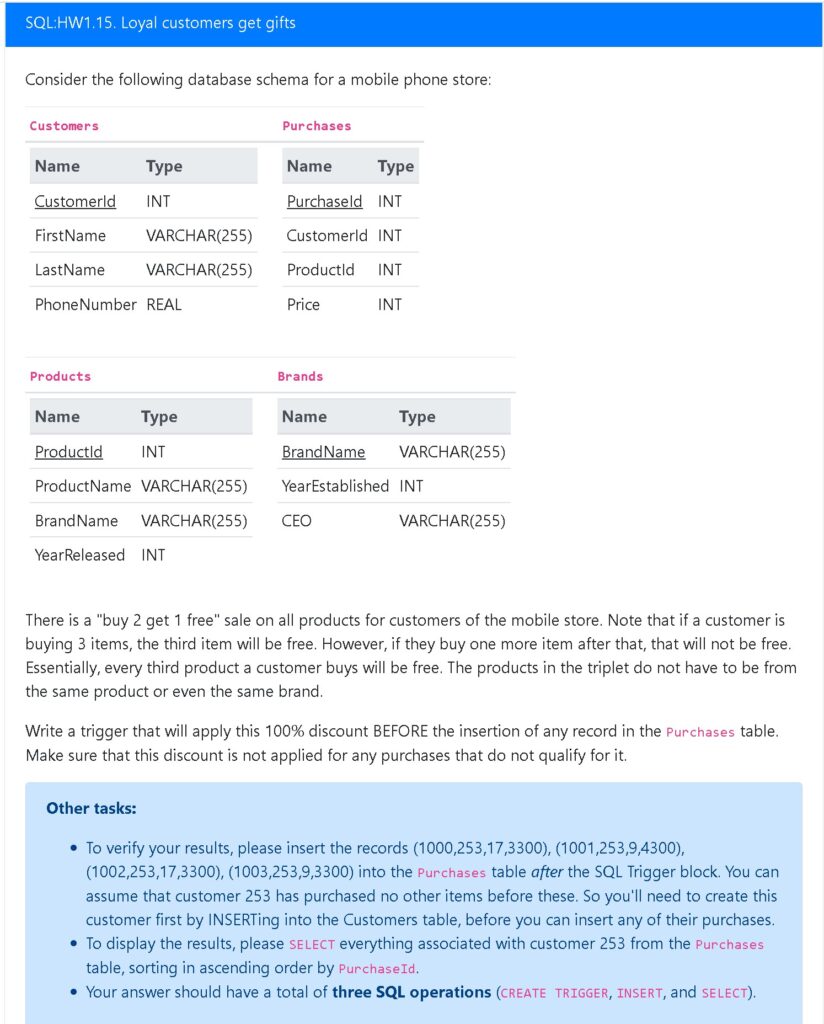

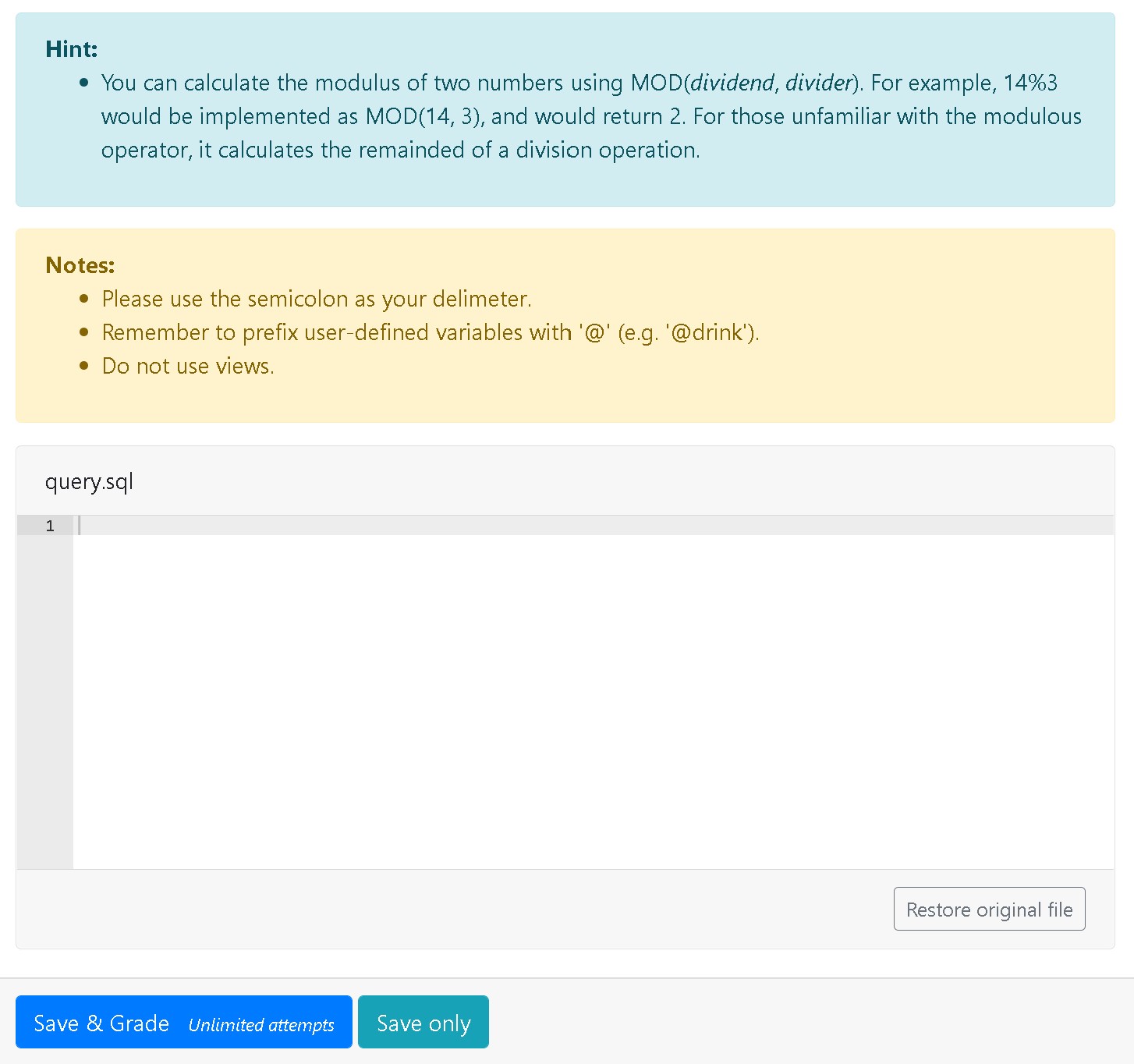

Students are given the database schema relevant to the problem (i.e., they can see the table names and the name and type of each column in those tables), but they cannot see the data stored in the database itself. Below the schema, students see the question prompt that their query must address. In this example, the database management system is MySQL. Students write their query in a simple code editor and can either save their submission or save and grade it.

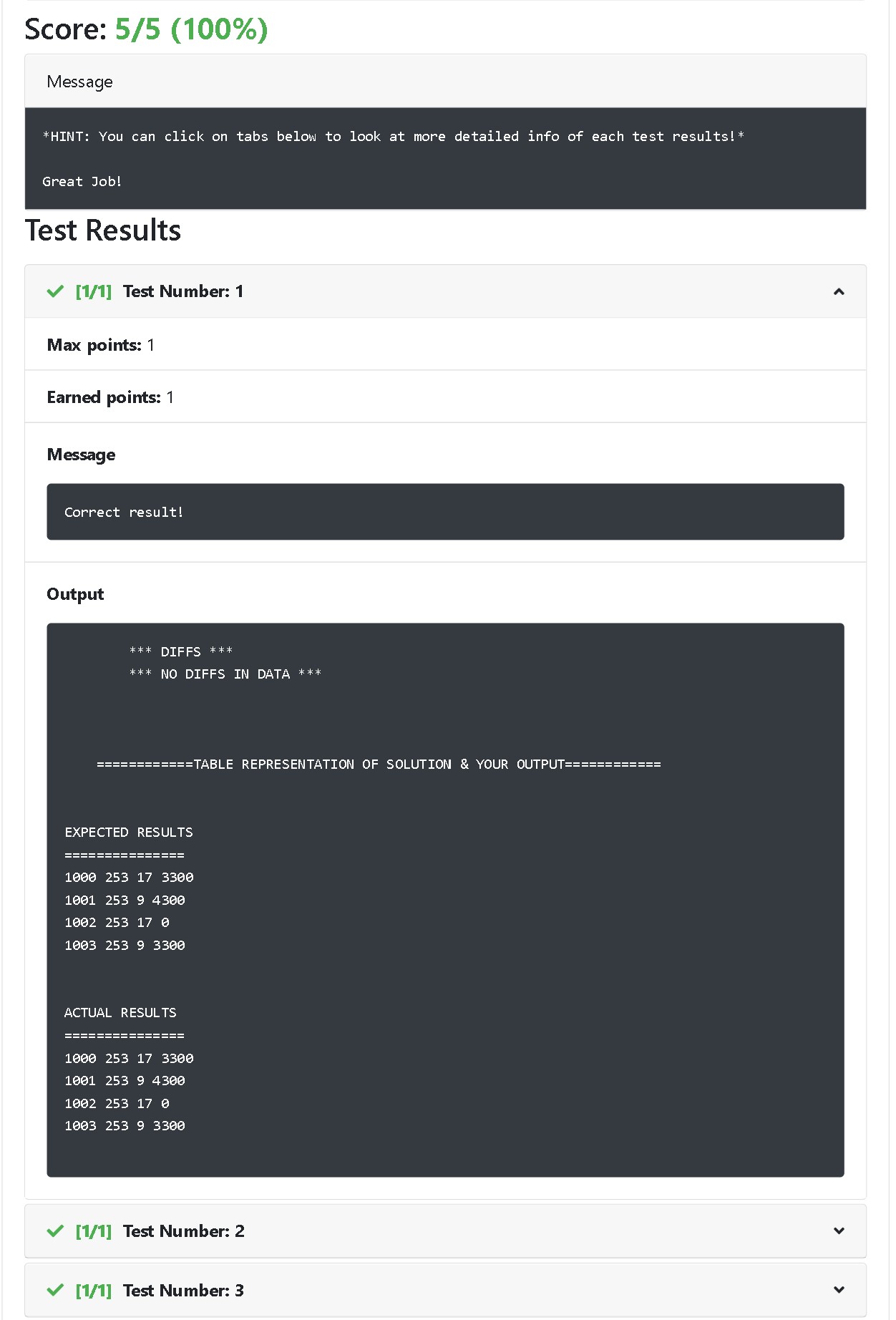

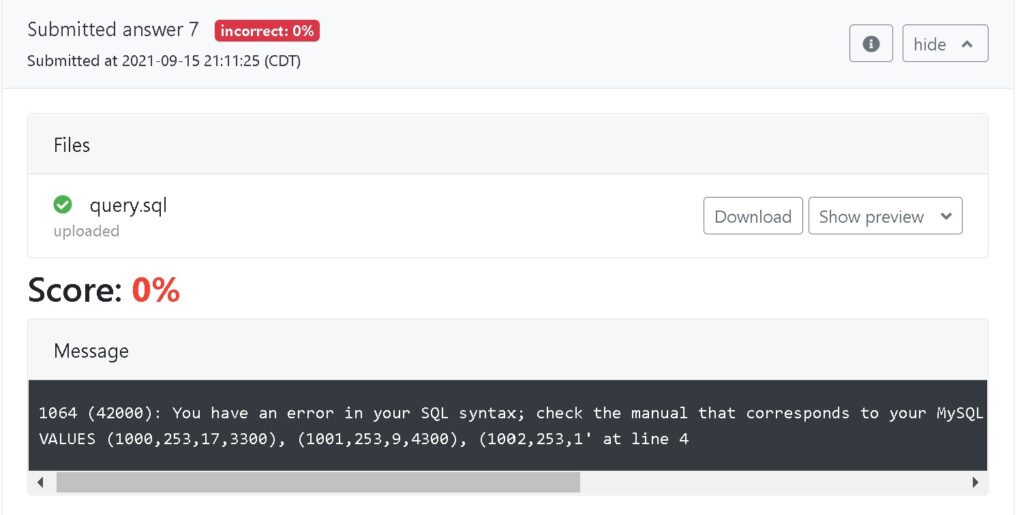

When a student chooses to have their submission graded, the submitted query is run against the database. If the query contains a syntactic error, the MySQL error code and message are reported back and displayed to the student. If the query runs successfully, the student can see whether it was correct (received full points) or contained a semantic error (did not receive full points), and both the expected and actual results are displayed as feedback. Students are graded on functional correctness (i.e., whether the actual results match the expected results). To discourage hardcoded answers, the autograder generates a new random data instance every time a student submits a query.
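The grading flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the author's implementation: Python's built-in `sqlite3` module stands in for the MySQL container, and `make_instance`, `grade`, the `employee` table, and the `points` value of 1 for full credit are all hypothetical. Both the reference query and the student's query run on the same fresh instance; a database error is returned as the error message, and otherwise the results are compared as multisets (row order ignored) unless the question requires ordering.

```python
import random
import sqlite3
from collections import Counter


def make_instance(seed=None):
    """Build a fresh, randomly populated database (hypothetical schema)."""
    rng = random.Random(seed)  # seed=None gives a different instance on every call
    conn = sqlite3.connect(":memory:")  # stand-in for a newly started MySQL container
    conn.execute("CREATE TABLE employee (id INTEGER PRIMARY KEY, dept TEXT, salary INTEGER)")
    rows = [(i, rng.choice(["HR", "IT", "Sales"]), rng.randint(30000, 120000))
            for i in range(1, rng.randint(5, 15) + 1)]
    conn.executemany("INSERT INTO employee VALUES (?, ?, ?)", rows)
    return conn


def grade(student_sql, reference_sql, seed=None, ordered=False):
    """Run both queries on the same fresh instance and compare their results."""
    conn = make_instance(seed)
    try:
        expected = conn.execute(reference_sql).fetchall()
        try:
            actual = conn.execute(student_sql).fetchall()
        except sqlite3.Error as exc:
            # Syntactic (or other execution) error: pass the database's message back.
            return {"status": "error", "message": str(exc), "points": 0}
    finally:
        conn.close()
    # Functional correctness: same rows, ignoring order unless the question requires it.
    if ordered:
        correct = actual == expected
    else:
        correct = Counter(actual) == Counter(expected)
    return {"status": "correct" if correct else "incorrect",
            "points": 1 if correct else 0,
            "expected": expected, "actual": actual}
```

Because `seed` defaults to `None`, each call to `grade` sees different rows, so a query that hardcodes values observed in one attempt is unlikely to keep passing. Returning `expected` and `actual` alongside the verdict mirrors the feedback display, where students see both result sets.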

Example of the autograder response for a student submission with a syntactic error:

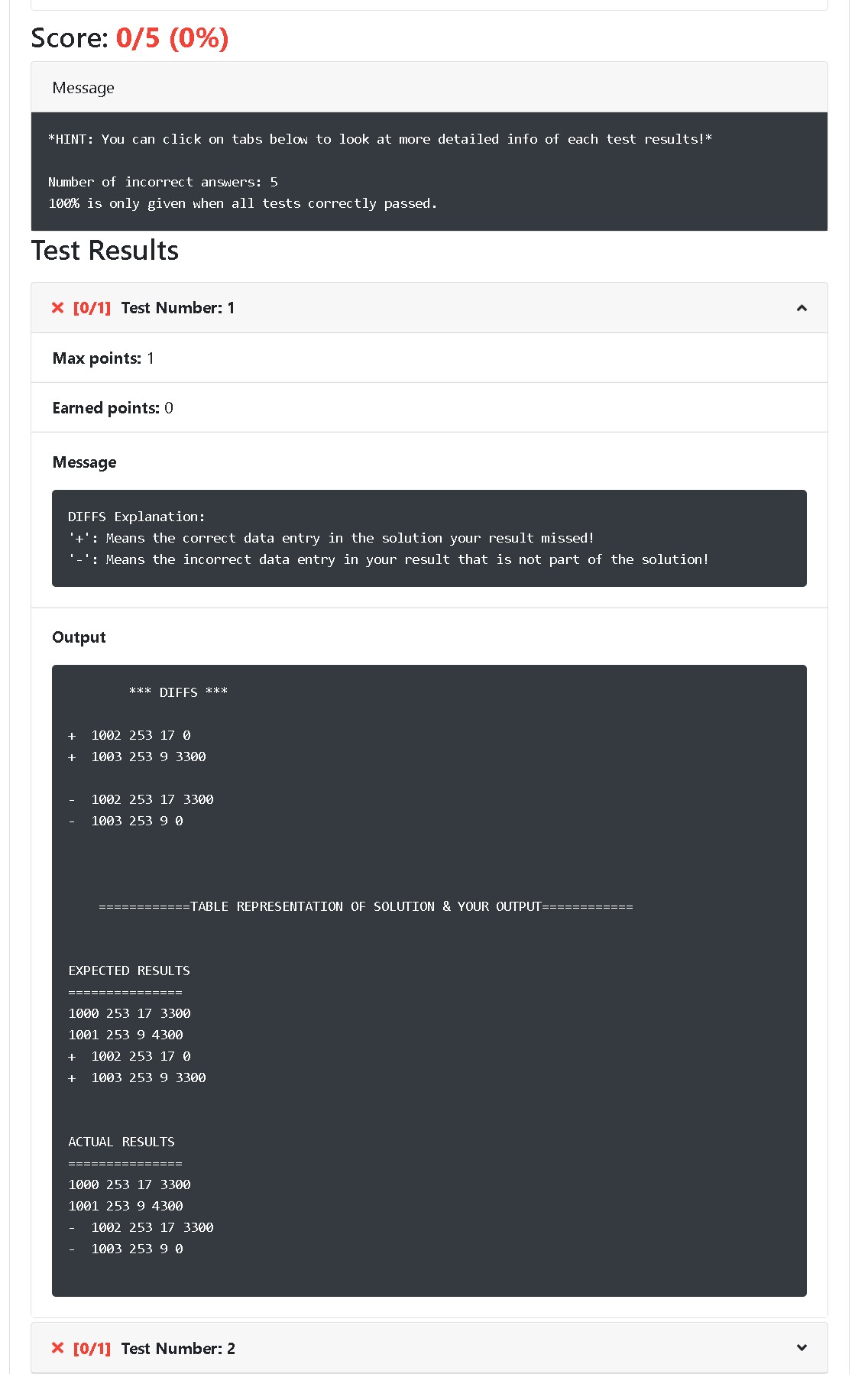

Example of the autograder response for a student submission with a semantic error:

As can be seen in the figure below, the student receives a detailed message explaining the differences between the results of the correct query and those of their submission. In this example (as shown in the output section), the student's query missed two correct records and included two incorrect records. Such insights can help students identify semantic errors in their queries.
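The missed and extra records in such a message can be computed as a multiset difference between the expected and actual result rows. The sketch below assumes results arrive as lists of tuples (as Python database drivers typically return them); `diff_results` and `feedback` are hypothetical helpers, and the message wording is illustrative rather than the autograder's actual output.

```python
from collections import Counter


def diff_results(expected, actual):
    """Return (missing, extra): rows the query omitted and rows it should not have returned.

    Rows are compared as multisets, so duplicate rows are counted individually.
    """
    exp, act = Counter(expected), Counter(actual)
    missing = list((exp - act).elements())
    extra = list((act - exp).elements())
    return missing, extra


def feedback(expected, actual):
    """Build a human-readable explanation of how the two result sets differ."""
    missing, extra = diff_results(expected, actual)
    if not missing and not extra:
        return "Correct: your results match the expected results."
    lines = [f"Your query missed {len(missing)} expected record(s) "
             f"and returned {len(extra)} unexpected record(s)."]
    lines += [f"  missing: {row}" for row in missing]
    lines += [f"  extra:   {row}" for row in extra]
    return "\n".join(lines)
```

For example, with `expected = [(1, "HR"), (2, "IT"), (3, "IT"), (4, "Sales")]` and `actual = [(1, "HR"), (4, "Sales"), (5, "HR"), (6, "IT")]`, `diff_results` reports `(2, "IT")` and `(3, "IT")` as missing and `(5, "HR")` and `(6, "IT")` as extra, the same two-missed, two-extra pattern described above. Using `Counter` rather than `set` keeps a query that returns a row twice from being treated as correct.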

Example of the autograder response for a student submission with a correct solution: